What is Screaming Frog?

Screaming Frog is a powerful website crawler and SEO auditing tool that enables users to analyze and optimize their websites for search engines. Widely regarded as a staple in technical SEO, Screaming Frog is used by professionals to identify structural issues, enhance site performance, and improve rankings. Its core capability lies in website crawling, which collects detailed data about a website’s architecture, pages, and links, regardless of its size.

At the heart of Screaming Frog’s functionality is its ability to perform technical SEO audits, uncovering issues like broken links, redirect chains, canonical tag misconfigurations, and crawl errors. It also identifies duplicate content, thin content, and improperly optimized meta titles, meta descriptions, and header tags. The tool supports JavaScript rendering, enabling users to analyze dynamically generated content and ensure it is accessible to both users and search engines.

Screaming Frog offers advanced features for link analysis, including tracking broken links, evaluating internal linking structures, and identifying nofollow attributes. It measures link depth and crawl depth, ensuring that critical content is easily accessible. Additionally, it checks HTTP status codes, such as 404 and 500 errors, and flags redirect issues to maintain a healthy site structure.

Content analysis is another major strength, with tools to evaluate meta tag length, detect missing alt text, assess content word count, and highlight duplicate titles and descriptions. The tool also checks image optimization, ensuring that oversized or uncompressed images do not hinder site performance. For multilingual or international sites, Screaming Frog validates hreflang attributes, ensuring proper targeting of geographic regions.

On the technical front, Screaming Frog performs structured data validation, verifying schema markup to enhance visibility in search engine results. It supports AMP page audits, checks for mobile-friendliness, and integrates PageSpeed Insights to evaluate performance metrics like page load speed. The tool also analyzes robots.txt files, meta robots tags, and secure protocol (HTTPS) implementation, ensuring the site is fully optimized for crawling and indexing.

Customization is a key attribute of Screaming Frog. Users can configure custom crawl settings, apply regex filters, and exclude specific URLs or patterns. The custom extraction feature allows users to retrieve specific elements from the website using CSSPath, XPath, or regex, making it highly adaptable to unique use cases.

Data visualization and reporting capabilities enhance its usability. Screaming Frog creates visual site maps, crawl diagrams, and internal link visualizations, helping users understand site structure and connectivity. Reports can be customized and exported in Excel or CSV format, facilitating offline analysis or client presentations. It also integrates with tools like Google Analytics, Google Search Console, and PageSpeed Insights, consolidating key metrics for a holistic view of site performance.

Screaming Frog excels at identifying and resolving canonicalization issues, optimizing redirects, and improving crawl budget efficiency. It handles dynamic content seamlessly and ensures that pages comply with modern SEO standards. The tool’s anchor text analysis evaluates internal anchor text distribution, while its mobile usability audits ensure responsiveness across devices.

For competitive analysis, Screaming Frog can crawl competitors’ websites, revealing insights into their site structure, backlink opportunities, and keyword usage. Its ability to compare domains and perform content gap analysis helps users identify strategic areas for improvement.

Despite its many advantages, Screaming Frog requires some technical expertise to leverage its full potential. Its learning curve and system resource usage during large-scale crawls may pose challenges for beginners or users with limited computing power. However, extensive documentation, a responsive support team, and active community forums provide ample resources for mastering the tool.

The long-term benefits of Screaming Frog are substantial. By addressing all aspects of technical SEO, content optimization, and link management, it helps users future-proof their websites against evolving search engine algorithms. With features like schema validation, mobile usability audits, and JavaScript crawling, Screaming Frog ensures that websites remain competitive and user-friendly.

A Broad Overview!

Ever wanted to make your website really cool?

Meet Screaming Frog, your website superhero!

This guide is like a friendly chat about how Screaming Frog can help your site be the best it can be, without any tricky tech talk.

Let’s get started on turning your website into a digital masterpiece!

Imagine a superhero checking out every corner of your site—that’s what Screaming Frog does. I’ll guide you on what it finds and how you can use that info to make your site even better.

Think of your website like a library, and Screaming Frog helps organize it. I’ll explain simple tricks to make sure people can find your site easily.

Now, let’s talk about the fun stuff—pictures and videos! We’ll guide you on making them look great and work smoothly on your website.

After its adventure, Screaming Frog leaves you a detailed map (reports) of your website. I’ll help you read it so you can understand what needs attention and what’s already doing well.

Time to put on your digital construction hat!

I’ll show you how to use Screaming Frog’s findings to fix things like broken links and make your website faster.

Just like a superhero checking in on the city regularly, I’ll guide you on how to keep an eye on your website’s health and make small improvements over time.

By the end of this guide, you’ll be the superhero of your website, making it a fantastic place for everyone who visits.

Ready to start the adventure?

Let’s go!

What is Screaming Frog?

Screaming Frog is a powerful and versatile website crawling tool used for search engine optimization (SEO) purposes. It acts like a digital spider, crawling through websites and collecting valuable data about various elements.

The primary goal is to provide website owners, developers, and SEO professionals with insights into their site’s structure, content, and overall health.

Key features of Screaming Frog include:

Find Broken Links:

- Quickly crawl a website to identify broken links and server errors.

- Export errors for efficient fixing or sharing with developers.

Audit Redirects:

- Locate temporary and permanent redirects.

- Identify redirect chains and loops.

- Easily audit URLs during site migration.

Analyse Page Titles & Meta Data:

- During a crawl, analyze page titles and meta descriptions.

- Identify issues like length, duplication, or missing elements.

Discover Duplicate Content:

- Use an algorithm to find exact duplicate URLs.

- Identify partially duplicated elements like page titles or descriptions.

- Uncover low-content pages.

Extract Data with XPath:

- Collect specific data from web pages using various methods.

- Extract social meta tags, additional headings, prices, SKUs, and more.

Review Robots & Directives:

- View URLs blocked by robots.txt, meta robots, or directives.

- Identify canonicals, rel="next", and rel="prev".

Generate XML Sitemaps:

- Quickly create XML Sitemaps and Image XML Sitemaps.

- Configure URLs with details like last modified, priority, and change frequency.

Integrate with GA, GSC & PSI:

- Connect to Google Analytics, Search Console, and PageSpeed Insights.

- Fetch user and performance data for all crawled URLs.

Crawl JavaScript Websites:

- Render web pages, including dynamic and JavaScript-rich sites.

- Ideal for frameworks like Angular, React, and Vue.js.

Visualise Site Architecture:

- Evaluate internal linking and URL structure.

- Use interactive crawl diagrams and tree graph visualizations.

Schedule Audits:

- Set up scheduled crawls at chosen intervals.

- Automate data export to locations like Google Sheets or via the command line.

Compare Crawls & Staging:

- Track SEO progress and changes between crawls.

- Compare staging against production environments using advanced URL mapping.

Screaming Frog is widely used in the field of SEO to perform audits, identify areas for improvement, and monitor the effectiveness of optimization efforts. Its user-friendly interface and comprehensive insights make it a valuable tool for anyone looking to enhance their website’s performance in search engine results.

Getting Started with Screaming Frog

Starting to make your website better is like going on an adventure. And for this journey, we have a special helper called Screaming Frog.

In this part, we’re going to help you get this tool, set it up on your computer, and make it work just right for what you need.

Think of it as the first steps to make Screaming Frog your website’s superhero!

Download and Installation

Before we get into making your website better, you need a special tool called Screaming Frog. It’s like getting a superhero tool for your website. First, we’ll help you find it and put it on your computer.

Imagine downloading Screaming Frog as grabbing your superhero suit. Here’s how you do it:

Find the Official Website: Visit Screaming Frog’s official website to get the tools your website needs. Just type “Screaming Frog” in your browser and gear up!

Get the Right Version:

Select the suitable version for your computer:

- Windows

- Mac

- Linux

Just like choosing the right fit for your clothing, this ensures everything works smoothly.

Installation Instructions:

Now that you have your superhero suit, let’s put it on your computer:

Start the Installation: Open the superhero suit package and follow the instructions. It’s like putting on your suit step by step.

Key Settings: Sometimes, superhero suits have special settings. We’ll tell you about any important ones so your Screaming Frog works just right.

Basic Setup and Configuration

Now that Screaming Frog is on your computer, let’s get to know it better. It’s like meeting your new helper for the first time. This section helps you get ready to use Screaming Frog to make your website amazing.

Open the Tool: Just like waking up your superhero, we’ll show you how to start Screaming Frog.

Overview of the Interface: Take a quick tour of the buttons and features. It’s like learning what each button on your superhero suit does.

Configuring Basic Settings:

Setting Up the Helper: Every superhero has preferences. We’ll help you set up Screaming Frog just the way you like it. Adjust the speed, choose how it looks, and more.

Basic Parameters: Understand the basic settings, like how fast your superhero tool should work and what it should look for on your website.

Setting Up a New Project:

Start a New Adventure: In Screaming Frog, your website project is like a new adventure. We’ll guide you on how to tell the superhero tool where to go (entering your website’s address) and any special things it should know.

This way, you’ll be all set to start your journey of making your website the best it can be with Screaming Frog!

Crawling Your Website

Crawling is like sending a website detective to check every nook and cranny of your site. Think of it as a digital explorer using a tool called Screaming Frog.

This explorer goes through your website, checking each page and collecting important information. It’s a bit like a superhero searching for clues to make your website work even better.

Initiating a Crawl with Screaming Frog

Now that you have Screaming Frog set up, it’s time to make it explore your website, just like a superhero searching for clues.

This process is called a “crawl.”

I’ll guide you on how to start this exploration.

Step-by-Step Crawl:

Launch the Crawl: Think of it as telling your superhero to start looking around. I’ll show you where to click and what settings to choose.

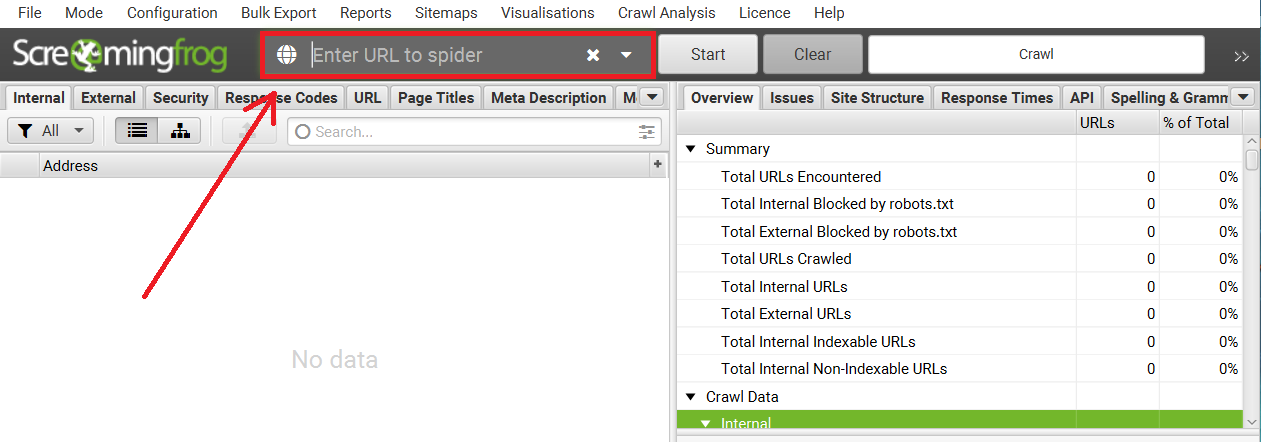

Start by entering the URL of your website, as shown in the screenshot.

Wait for the Magic: While Screaming Frog is crawling, it’s like your superhero checking every corner of your website for important information.

The free version of Screaming Frog lets you crawl up to 500 URLs per crawl.

Understanding Crawl Results

After exploring your website, it’s like someone went on a journey and found some interesting things. Now, we’re in the phase of figuring out what these discoveries mean.

Think of it as solving a little puzzle based on what we’ve found.

We want to understand what’s going well on your website and if there are places that might need a bit of attention.

Reviewing the Data

Once Screaming Frog completes its exploration of your website, it compiles a detailed report—a bit like a comprehensive document summarizing its findings.

I’ll guide you through this report, helping you navigate and interpret it.

Reading the Report:

Picture this as going through a detailed document that outlines everything discovered during the website exploration. I’ll assist you in understanding and interpreting this comprehensive overview.

Key Metrics:

Within the report, there are key metrics—important numbers and details that provide insights into how your website is performing.

With the Google Analytics or Search Console integration connected, you can discover which pages on your website attracted the most attention. It’s akin to understanding the high-traffic areas of your digital space.

If there are any problems or challenges with your website, the report highlights them. Think of it as finding clues that help you address potential issues and enhance your website.

I’ll walk you through each of these, step by step, below.

Identifying Common Issues and Errors

When the crawl is complete, we need to start exploring each finding one by one. I’ll help you spot and tackle common issues your website might be facing.

I’ll show you how to find and understand any errors Screaming Frog discovered. It’s like finding clues to fix things.

Internal Links:

This feature ensures that the links within your website are working correctly, providing a seamless navigation experience for users. It also helps identify and fix any broken internal links.

- Importance: Examines links within your website, ensuring smooth navigation.

- Action: Identifies and fixes broken internal links.

External Links:

This function analyzes the external links on your site for relevance. It checks for broken external links and provides recommendations for fixing them to maintain a healthy link profile.

- Importance: Analyzes links to external sites, verifying relevance.

- Action: Checks for broken external links, ensuring valuable connections.

Security:

The security check examines your website for potential security issues. It identifies threats and suggests solutions to enhance the overall security of your site.

- Importance: Checks for security issues, ensuring user data safety.

- Action: Identifies potential threats and suggests solutions.

Response Codes:

This feature reviews the responses from your server, addressing errors and redirects. It helps in optimizing server responses for a better user experience.

- Importance: Examines server responses, ensuring proper functionality.

- Action: Addresses server errors and redirects for a seamless user experience.
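To make the idea of reviewing response codes concrete, here is a minimal sketch of how crawled URLs could be sorted into buckets by status code. The `crawl` dictionary and the bucket names are made up for illustration; this is not how Screaming Frog stores or labels its data.

```python
from collections import defaultdict


def classify_status(code: int) -> str:
    """Map an HTTP status code to a coarse audit bucket."""
    if 200 <= code < 300:
        return "success"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client_error"   # e.g. 404 Not Found
    if 500 <= code < 600:
        return "server_error"   # e.g. 500 Internal Server Error
    return "other"


def group_by_status(crawl: dict[str, int]) -> dict[str, list[str]]:
    """Group URLs by the bucket their status code falls into."""
    groups = defaultdict(list)
    for url, code in crawl.items():
        groups[classify_status(code)].append(url)
    return dict(groups)


# Hypothetical crawl results (URL -> status code), for illustration only.
crawl = {
    "https://example.com/": 200,
    "https://example.com/old": 301,
    "https://example.com/missing": 404,
    "https://example.com/broken": 500,
}
report = group_by_status(crawl)
```

In practice, the `client_error` and `server_error` buckets are the ones you would usually fix first.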

URLs:

The URL analysis assesses the structure of your website’s URLs, considering their impact on search ranking and user experience. It helps optimize URLs for better visibility.

- Importance: Analyzes URL structure, impacting search ranking and user experience.

- Action: Identifies issues like URL length and structure for optimization.

Blocked URLs:

This function allows you to view and audit URLs that are disallowed by the robots.txt file. Ensuring proper management of blocked URLs is crucial for SEO.

- Importance: Views and audits URLs disallowed by the robots.txt protocol.

- Action: Ensures proper management of URLs according to the robots.txt rules.
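If you want to double-check a handful of URLs against robots.txt rules yourself, a small sketch using Python's standard `urllib.robotparser` module could look like this. The robots.txt content and the URLs are invented examples, and the standard-library parser uses simple prefix matching, so it may not agree with Google's handling of wildcard rules.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content, made up for this sketch.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /cart
"""


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "*") -> list[str]:
    """Return the URLs that the given robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


urls = [
    "https://example.com/",
    "https://example.com/private/report.html",
    "https://example.com/cart",
    "https://example.com/blog/post",
]
blocked = blocked_urls(ROBOTS_TXT, urls)
```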

Blocked Resources:

Examining blocked resources in rendering mode is essential for understanding how certain elements on your website may be restricted. It aids in optimizing the rendering process.

- Importance: Views and audits resources blocked in rendering mode.

- Action: Ensures resources are accessible for proper rendering.

URL Issues:

This aspect examines various issues related to URLs, such as the presence of non-ASCII characters, underscores, uppercase characters, parameters, and long URLs. Addressing these issues is vital for optimal website performance.

- Importance: Examines issues like non-ASCII characters, underscores, uppercase characters, parameters, or long URLs.

- Action: Identifies and addresses URL-related issues for optimization.
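The URL checks listed above are simple enough to sketch in a few lines. The function below is only an illustration: the issue labels and the 115-character limit are assumptions chosen for this example, not values taken from the tool.

```python
from urllib.parse import urlsplit

MAX_URL_LENGTH = 115  # assumed threshold, for illustration only


def url_issues(url: str, max_length: int = MAX_URL_LENGTH) -> list[str]:
    """Return a list of labels for the URL issues found in `url`."""
    parts = urlsplit(url)
    issues = []
    if not url.isascii():
        issues.append("non_ascii")
    if "_" in parts.path:
        issues.append("underscores")
    if any(ch.isupper() for ch in parts.path):
        issues.append("uppercase")
    if parts.query:
        issues.append("parameters")
    if len(url) > max_length:
        issues.append("over_length")
    return issues


# Example: a path with an underscore, capital letters, and a query string.
example = url_issues("https://example.com/My_Page?id=1")
```

Only the path is checked for underscores and uppercase letters, because host names are case-insensitive and query strings often carry values you cannot change.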

Duplicate Pages:

The tool helps identify exact and near-duplicate pages on your website, ensuring content uniqueness. This is crucial for SEO and avoiding duplicate content issues.

- Importance: Discovers exact and near-duplicate pages using advanced algorithmic checks.

- Action: Ensures content uniqueness and addresses duplication issues.
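To get a feel for how exact and near-duplicate detection can work, here is a rough sketch. It uses an MD5 hash for exact matches and word shingles with Jaccard similarity for near matches. These are stand-in techniques picked for illustration, so treat this as one possible approach rather than a description of Screaming Frog's own algorithm.

```python
import hashlib


def content_hash(text: str) -> str:
    """Hash of the page text; identical text gives an identical hash."""
    return hashlib.md5(text.encode("utf-8")).hexdigest()


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Break text into overlapping word groups ("shingles") of `size` words."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(a: str, b: str) -> float:
    """Jaccard similarity of the two texts' shingle sets (0.0 to 1.0)."""
    sa, sb = shingles(a), shingles(b)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


# Made-up page texts for illustration.
page_a = "red running shoes for men on sale today"
page_b = "red running shoes for men on sale today"
page_c = "red running shoes for women on sale today"

exact_duplicate = content_hash(page_a) == content_hash(page_b)
near_score = similarity(page_a, page_c)
```

A real audit would compare the score against a similarity threshold of your choosing and would normally strip navigation and footer text first.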

Page Titles:

The review of page titles is important for search ranking and user engagement. The tool helps identify missing or duplicate page titles that need attention for optimization.

- Importance: Reviews page titles, impacting search ranking and user engagement.

- Action: Identifies missing or duplicate page titles for improvement.

Meta Description:

Examining meta descriptions is critical for influencing search snippets and user clicks. Ensuring well-crafted and unique meta descriptions is part of effective SEO.

- Importance: Examines meta descriptions, influencing search snippets and user clicks.

- Action: Ensures compelling and unique meta descriptions.
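The title and meta description checks described above (missing, too long, duplicated) can be sketched like this. The `pages` data, the issue labels, and the character limits of 60 and 155 are assumptions for illustration only.

```python
from collections import Counter

# Assumed character limits, for illustration only.
TITLE_MAX = 60
DESCRIPTION_MAX = 155


def audit_snippets(pages: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """Flag missing, over-long, and duplicate titles and descriptions."""
    title_counts = Counter(p.get("title", "").strip() for p in pages.values())
    desc_counts = Counter(p.get("description", "").strip() for p in pages.values())
    report = {}
    for url, page in pages.items():
        title = page.get("title", "").strip()
        desc = page.get("description", "").strip()
        issues = []
        if not title:
            issues.append("missing_title")
        else:
            if len(title) > TITLE_MAX:
                issues.append("title_too_long")
            if title_counts[title] > 1:
                issues.append("duplicate_title")
        if not desc:
            issues.append("missing_description")
        else:
            if len(desc) > DESCRIPTION_MAX:
                issues.append("description_too_long")
            if desc_counts[desc] > 1:
                issues.append("duplicate_description")
        report[url] = issues
    return report


# Hypothetical pages, for illustration only.
pages = {
    "/": {"title": "Home", "description": "Welcome to our shop."},
    "/about": {"title": "Home", "description": ""},
    "/blog": {"title": "B" * 70, "description": "Read our latest posts."},
}
snippet_report = audit_snippets(pages)
```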

Meta Keywords:

Although not widely used by major search engines, the tool checks for meta keywords, which might be relevant for regional search engines.

- Importance: Checks mainly for reference or regional search engines.

- Action: Adheres to modern SEO practices related to meta keywords.

File Size:

Assessing the size of URLs and images is crucial for optimizing website performance. The tool helps identify large files that may impact loading times.

- Importance: Assesses the size of URLs and images.

- Action: Optimizes file sizes for improved performance.

Response Time:

This feature allows you to view how long pages take to respond to requests. Optimizing response times is essential for a positive user experience.

- Importance: Views how long pages take to respond to requests.

- Action: Optimizes elements for faster response times.

Last-Modified Header:

Viewing the last modified date in the HTTP header provides insights into when a page was last updated. This information is important for managing and maintaining content.

- Importance: Views the last modified date in the HTTP header.

- Action: Ensures proper handling of last-modified information.

Crawl Depth:

Understanding how deep a URL is within a website’s architecture is crucial for site structure analysis. It helps optimize URL depth for better organization.

- Importance: Views how deep a URL is within a website’s architecture.

- Action: Optimizes URL structure for better accessibility.
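Crawl depth is simply the number of clicks it takes to reach a page from the start page. One common way to compute it is a breadth-first search over the internal link graph, as in the sketch below. The `links` dictionary is a made-up example of which pages link to which.

```python
from collections import deque


def crawl_depths(links: dict[str, list[str]], start: str) -> dict[str, int]:
    """Return the minimum number of clicks from `start` to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph (page -> pages it links to).
links = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/", "/blog/post-2"],
}
depths = crawl_depths(links, "/")
```

Pages that never show up in the result are not reachable from the start page through internal links at all.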

Word Count:

Analyzing the number of words on every page provides insights into content richness. Maintaining an appropriate word count is important for SEO.

- Importance: Analyzes the number of words on every page.

- Action: Ensures content richness and relevance.

H1, H2:

Reviewing header tags (H1, H2) is essential for content organization and SEO. The tool helps identify missing, duplicate, or improperly used header tags.

- Importance: Reviews header tags, organizing content and aiding in SEO.

- Action: Ensures proper usage of header tags for clarity and optimization.
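Here is a small sketch of how a heading check could be done with Python's built-in `html.parser`. The issue labels (`missing_h1`, `multiple_h1`, `missing_h2`) are names invented for this example, and the rules are deliberately simple.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collect the text of every <h1> and <h2> element in a page."""

    def __init__(self):
        super().__init__()
        self.headings = {"h1": [], "h2": []}
        self._current = None   # heading tag we are currently inside, if any
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in self.headings:
            self._current = tag
            self._buffer = []

    def handle_data(self, data):
        if self._current:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            self.headings[tag].append("".join(self._buffer).strip())
            self._current = None


def heading_issues(html: str) -> list[str]:
    """Return simple heading problems found in the HTML."""
    collector = HeadingCollector()
    collector.feed(html)
    issues = []
    h1s = collector.headings["h1"]
    if not h1s:
        issues.append("missing_h1")
    elif len(h1s) > 1:
        issues.append("multiple_h1")
    if not collector.headings["h2"]:
        issues.append("missing_h2")
    return issues


example_issues = heading_issues("<h1>Shoes</h1><h1>Sale</h1><p>Text</p>")
```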

Meta Robots:

Examining directives like index, noindex, follow, nofollow, etc., helps control how search engines crawl and index your content.

- Importance: Views directives like index, noindex, follow, nofollow, noarchive, nosnippet, etc.

- Action: Ensures proper use of meta directives for search engine crawling behavior.
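As a quick illustration, the content of a meta robots tag is just a comma-separated list of directives, so it can be read with a few lines of code. The helper names below are hypothetical. The sketch treats `noindex` and `none` as blocking indexing, since `none` is shorthand for `noindex, nofollow`.

```python
def parse_robots_directives(content: str) -> set[str]:
    """Split a meta robots content value into a set of lowercase directives."""
    return {token.strip().lower() for token in content.split(",") if token.strip()}


def is_indexable(content: str) -> bool:
    """True unless the directives include noindex (or none)."""
    return not ({"noindex", "none"} & parse_robots_directives(content))


def links_followed(content: str) -> bool:
    """True unless the directives include nofollow (or none)."""
    return not ({"nofollow", "none"} & parse_robots_directives(content))


# Example value as it might appear in <meta name="robots" content="...">.
directives = parse_robots_directives("NOINDEX, follow")
```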

Meta Refresh:

Viewing meta refresh directives helps in understanding page redirection. Proper use of meta refresh is important for effective redirection strategies.

- Importance: Views meta refresh directives, including target page and time delay.

- Action: Ensures proper use of meta refresh for effective page redirection.

Canonicals:

Viewing link elements and canonical HTTP headers helps ensure proper indexing of content. Canonical tags are crucial for avoiding duplicate content issues.

- Importance: Views link elements and canonical HTTP headers.

- Action: Ensures correct implementation of canonical tags for proper indexing.

X-Robots-Tag:

This feature allows you to view directives issued via the HTTP header, providing additional control over how search engines crawl content.

- Importance: Views directives issued via the HTTP header.

- Action: Ensures proper handling of X-Robots-Tag directives.

Pagination:

Examining rel="next" and rel="prev" attributes helps optimize pagination for user-friendly navigation.

- Importance: Views rel="next" and rel="prev" attributes.

- Action: Optimizes pagination for user-friendly navigation.

Follow & Nofollow:

This function allows you to view meta nofollow and nofollow link attributes, providing insights into how search engines treat specific links.

- Importance: Views meta nofollow and nofollow link attributes.

- Action: Ensures proper use of follow and nofollow attributes.

Redirect Chains:

Discovering redirect chains and loops helps ensure efficient handling of redirects, avoiding potential issues.

- Importance: Discovers redirect chains and loops.

- Action: Ensures efficient handling of redirects.
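To show what a redirect chain or loop looks like in data, here is a minimal sketch that follows redirects through a lookup table. The `redirects` mapping is a made-up example (source URL to target URL); a real audit would build it from crawl results.

```python
def trace_redirects(redirects: dict[str, str], start: str) -> tuple[list[str], bool]:
    """Follow redirects from `start`.

    Returns the list of URLs visited and a flag that is True when the
    chain loops back to a URL it has already visited.
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return chain, True  # loop detected
        seen.add(current)
    return chain, False


# Hypothetical redirect map, for illustration only.
redirects = {"/a": "/b", "/b": "/c", "/x": "/y", "/y": "/x"}

chain, is_loop = trace_redirects(redirects, "/a")
loop_chain, loop_found = trace_redirects(redirects, "/x")
```

The number of hops is `len(chain) - 1`. Anything above one hop is a chain worth shortening so that the first URL points straight at the final destination.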

hreflang Attributes:

Auditing missing confirmation links and checking language codes is crucial for international SEO and proper targeting of audiences.

- Importance: Audits missing confirmation links, inconsistent and incorrect language codes, non-canonical hreflang, etc.

- Action: Ensures proper implementation of hreflang attributes for international SEO.
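A "missing confirmation link" means page A lists page B as an alternate language version, but page B does not link back to page A. The sketch below checks for that in a simple data structure. The `hreflang` dictionary (page URL to its language-to-URL annotations) is an assumed layout for illustration.

```python
def missing_return_links(hreflang: dict[str, dict[str, str]]) -> list[tuple[str, str]]:
    """Return (page, target) pairs where target does not link back to page."""
    missing = []
    for page, alternates in hreflang.items():
        for target in alternates.values():
            if target == page:
                continue  # self-reference, nothing to confirm
            if page not in hreflang.get(target, {}).values():
                missing.append((page, target))
    return missing


# Hypothetical hreflang annotations: the German page forgets to link back.
hreflang = {
    "https://example.com/en/": {
        "en": "https://example.com/en/",
        "de": "https://example.com/de/",
    },
    "https://example.com/de/": {
        "de": "https://example.com/de/",
    },
}
problems = missing_return_links(hreflang)
```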

Inlinks:

Viewing all pages linking to a URL, along with anchor text and link attributes, helps manage inbound links for enhanced connectivity.

- Importance: Views all pages linking to a URL, anchor text, and link attributes.

- Action: Manages inbound links for enhanced connectivity.

Outlinks:

Viewing all pages a URL links out to, as well as resources, aids in managing outbound links for an effective linking strategy.

- Importance: Views all pages a URL links out to, as well as resources.

- Action: Manages outbound links for effective linking strategy.

Anchor Text:

Viewing all link text and alt text from images with links helps optimize anchor text for improved SEO.

- Importance: Views all link text and alt text from images with links.

- Action: Optimizes anchor text for improved SEO.

Rendering:

Crawling JavaScript frameworks is essential for proper rendering of dynamic content on your website.

- Importance: Crawls JavaScript frameworks like AngularJS and React.

- Action: Ensures proper rendering of dynamic content.

AJAX:

AJAX stands for Asynchronous JavaScript and XML. This setting tells the SEO Spider to obey Google’s AJAX Crawling Scheme. Google has deprecated the scheme, but it was once used to make dynamic content rendered by JavaScript accessible and indexable for search engine crawlers.

- Importance: Optionally obeys Google’s now-deprecated AJAX Crawling Scheme.

- Action: Helps audit legacy sites that still rely on the scheme.

Images:

This feature allows the SEO Spider to view all URLs with image links on a website. Additionally, it enables the management of images for an optimal user experience. It’s crucial for analyzing the image structure and ensuring that images are appropriately optimized for SEO.

- Importance: Views all URLs with the image link and all images from a given page.

- Action: Manages images for optimal user experience.

User-Agent Switcher:

The User-Agent Switcher feature enables the SEO Spider to crawl a website while posing as different user-agents, including popular search engine bots like Googlebot, Bingbot, Yahoo! Slurp, mobile user-agents, or even a custom user-agent. This is useful for checking how the website appears to different crawlers and devices.

- Importance: Crawls as different user-agents, including Googlebot, Bingbot, Yahoo! Slurp, mobile user-agents, or custom UA.

- Action: Ensures compatibility and visibility across various user agents.

Custom HTTP Headers:

This feature allows the SEO Spider to supply any header value in a request. Headers contain additional information about the request or the server’s response. Customizing these headers can help simulate specific scenarios or conditions during crawling.

- Importance: Supplies any header value in a request, from Accept-Language to cookie.

- Action: Customizes HTTP headers for specific requirements.

Custom Source Code Search:

The Custom Source Code Search feature enables the SEO Spider to find specific elements or code snippets within the source code of a website. It supports searching through the source code using XPath, CSS Path selectors, or regular expressions (regex).

- Importance: Finds anything in the source code of a website using XPath, CSS Path selectors, or regex.

- Action: Extracts specific information from the source code.

Custom Extraction:

Custom Extraction allows the SEO Spider to scrape and extract any data from the HTML of a URL. It supports extracting data using XPath, CSS Path selectors, or regex. This feature is particularly useful for retrieving specific information from web pages.

- Importance: Scrapes any data from the HTML of a URL using XPath, CSS Path selectors, or regex.

- Action: Extracts specific data based on website needs.

Google Analytics Integration:

This feature enables the SEO Spider to connect to the Google Analytics API, allowing the extraction of user and conversion data during a crawl. It enhances data analysis by incorporating insights from Google Analytics.

- Importance: Connects to the Google Analytics API and pulls in user and conversion data during a crawl.

- Action: Enhances data analysis with integrated Google Analytics insights.

Google Search Console Integration:

The Google Search Console Integration feature connects the SEO Spider to the Google Search Analytics and URL Inspection APIs. It allows for the collection of performance and index status data in bulk.

- Importance: Connects to the Google Search Analytics and URL Inspection APIs.

- Action: Collects performance and index status data for comprehensive analysis.

PageSpeed Insights Integration:

This feature connects the SEO Spider to the PageSpeed Insights (PSI) API, providing Lighthouse metrics, speed opportunities, diagnostics, and Chrome User Experience Report (CrUX) data at scale.

- Importance: Connects to the PSI API for Lighthouse metrics, speed opportunities, diagnostics, and Chrome User Experience Report (CrUX) data.

- Action: Enhances analysis with integrated insights into page speed.

External Link Metrics:

External Link Metrics allows the SEO Spider to pull external link metrics from third-party sources like Majestic, Ahrefs, and Moz APIs. This feature assists in conducting content audits or profiling links for improved linking strategy.

- Importance: Pulls external link metrics from Majestic, Ahrefs, and Moz APIs into a crawl.

- Action: Conducts content audits or profiles links for improved linking strategy.

XML Sitemap Generation:

This feature enables the SEO Spider to create XML Sitemaps and Image XML Sitemaps. It offers advanced configuration options over URLs to include, last modified date, priority, and change frequency.

- Importance: Creates an XML sitemap and an image sitemap using the SEO spider.

- Action: Ensures accurate and up-to-date sitemaps for effective search engine indexing.
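For the curious, an XML sitemap is a fairly simple file. The sketch below builds one with Python's standard library, using the same fields mentioned above (last modified, change frequency, priority). The `entries` list is made-up data, and this is only an illustration of the file format, not of how the SEO Spider generates its sitemaps.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: list[dict[str, str]]) -> str:
    """Build a sitemap XML string from a list of URL entries.

    Each entry needs a "loc" key; "lastmod", "changefreq", and
    "priority" are optional.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry["loc"]
        for field in ("lastmod", "changefreq", "priority"):
            if field in entry:
                ET.SubElement(url, field).text = entry[field]
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical entries, for illustration only.
entries = [
    {"loc": "https://example.com/", "lastmod": "2024-01-15", "priority": "1.0"},
    {"loc": "https://example.com/blog", "changefreq": "weekly"},
]
sitemap_xml = build_sitemap(entries)
```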

Custom robots.txt:

The Custom robots.txt feature allows the SEO Spider to download, edit, and test a site’s robots.txt file. It provides customization options to control how search engines crawl and index the website.

- Importance: Downloads, edits, and tests a site’s robots.txt using the custom robots.txt feature.

- Action: Customizes robots.txt for specific directives.

Rendered Screen Shots:

Rendered Screen Shots fetch, view, and analyze rendered pages crawled by the SEO Spider. It provides visual insights into how pages are rendered after JavaScript execution.

- Importance: Fetches, views, and analyzes rendered pages crawled.

- Action: Enhances visualization and analysis of rendered content.

Store & View HTML & Rendered HTML:

This feature is essential for analyzing the Document Object Model (DOM) of web pages. It stores and allows viewing of both the raw HTML and the rendered HTML of crawled pages.

- Importance: Essential for analyzing the DOM.

- Action: Stores and views HTML and rendered HTML for in-depth analysis.

AMP Crawling & Validation:

The AMP Crawling & Validation feature allows the SEO Spider to crawl Accelerated Mobile Pages (AMP) URLs and validate them using the official integrated AMP Validator.

- Importance: Crawls AMP URLs and validates them using the official integrated AMP Validator.

- Action: Ensures compatibility and adherence to AMP standards.

XML Sitemap Analysis:

This feature allows the SEO Spider to crawl an XML Sitemap independently or as part of a larger crawl. It helps identify missing, non-indexable, and orphan pages listed in the XML Sitemap.

- Importance: Crawls an XML Sitemap independently or as part of a crawl.

- Action: Identifies missing, non-indexable, and orphan pages for optimization.

Visualizations:

Visualizations provide graphical representations of internal linking and URL structure using force-directed diagrams and tree graphs. They enhance understanding of the website’s architecture.

- Importance: Analyzes internal linking and URL structure using crawl and directory tree force-directed diagrams and tree graphs.

- Action: Enhances understanding of website structure for optimization.

Structured Data & Validation:

The Structured Data & Validation feature extracts and validates structured data against Schema.org specifications and Google search features.

- Importance: Extracts & validates structured data against Schema.org specifications and Google search features.

- Action: Ensures correct implementation of structured data for enhanced search features.

Spelling & Grammar:

This feature checks website content for accurate spelling and grammar. It supports over 25 different languages for comprehensive analysis.

- Importance: Spell & grammar check your website in over 25 different languages.

- Action: Enhances content quality with accurate spelling and grammar.

Crawl Comparison:

Crawl Comparison allows the SEO Spider to compare crawl data over time, tracking technical SEO progress and detecting changes in site structure, key elements, and metrics.

- Importance: Compares crawl data to see changes in issues and opportunities.

- Action: Tracks technical SEO progress, compares site structure, and detects changes for ongoing optimization.

By comprehensively reviewing these elements, you’re essentially becoming a digital detective, identifying areas for improvement and ensuring your website operates at its best.

Analyzing SEO Elements

Analyzing SEO elements is like looking at the important parts of your website to make it stand out on the internet. Imagine your website is a book, and SEO (Search Engine Optimization) elements are like the chapters that make it interesting for others to read.

Title Tags and Meta Descriptions

Now that Screaming Frog has explored your website, it’s time to focus on specific elements, like the titles and short descriptions on each page. This is crucial for how your website shows up in search results.

Inspecting Title Tags:

Understanding Titles: We’ll guide you on how to look at your titles, which are like the headlines of each page. They’re crucial for telling search engines and users what each page is about.

Making Them Effective: Learn tips on crafting titles that grab attention and include important keywords.
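If you want to check titles in bulk, a short script can help. The sketch below flags missing, overly long, and duplicate titles. The 60-character limit is an assumed rule of thumb rather than an official cutoff, and the function name and sample pages are hypothetical.

```python
from collections import Counter

MAX_TITLE_CHARS = 60  # assumed rule of thumb, not an official limit


def audit_titles(pages):
    """pages maps URL -> title text. Returns URL -> list of issue labels."""
    # Count how often each non-empty title appears, ignoring case.
    counts = Counter(t.strip().lower() for t in pages.values() if t.strip())
    issues = {}
    for url, title in pages.items():
        clean = title.strip()
        found = []
        if not clean:
            found.append("missing")
        else:
            if len(clean) > MAX_TITLE_CHARS:
                found.append("too long")
            if counts[clean.lower()] > 1:
                found.append("duplicate")
        if found:
            issues[url] = found
    return issues


sample_pages = {
    "/": "Home | Example Shop",
    "/about": "",
    "/blog": "Home | Example Shop",
    "/guide": "The Complete Beginner's Guide to Technical SEO Audits for Small Online Shops",
}

for url, problems in audit_titles(sample_pages).items():
    print(url, "->", ", ".join(problems))
```

The same approach works for meta descriptions if you swap in a longer length limit.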

Analyzing Meta Descriptions:

What’s a Meta Description?: Understand the role of meta descriptions, which are like short blurbs describing your page. We’ll guide you on where to find them.

Crafting Compelling Descriptions: Learn how to make meta descriptions engaging, informative, and enticing for users.

Header Tags and Keyword Usage

Now, let’s zoom in on how your content is structured. It’s like organizing the chapters of a book. We’ll focus on header tags and using the right keywords.

Understanding Header Tags:

Hierarchy in Content: We’ll explain the importance of header tags (like H1, H2) in organizing content. It’s like giving each section of your content a title.

Optimizing for SEO: Learn how using header tags smartly can improve how search engines understand and rank your content.
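Here is a small sketch of what a heading check might look like, using only Python’s standard library. It collects heading levels from a page’s HTML and reports a missing H1, more than one H1, or a skipped level (for example, an H2 followed directly by an H4). The rules it applies are common conventions and are assumptions here, not requirements of any search engine.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Records the level of every h1-h6 tag in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html):
    """Return a list of human-readable heading structure problems."""
    parser = HeadingCollector()
    parser.feed(html)
    levels = parser.levels
    issues = []
    h1_count = levels.count(1)
    if h1_count == 0:
        issues.append("no H1")
    elif h1_count > 1:
        issues.append("multiple H1s")
    # A heading should not jump more than one level deeper than the previous one.
    for prev, cur in zip(levels, levels[1:]):
        if cur > prev + 1:
            issues.append(f"H{prev} followed by H{cur}")
    return issues


page = "<h1>Guide</h1><h2>Setup</h2><h4>Details</h4>"
print(heading_issues(page))
```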

Smart Keyword Usage:

Choosing the Right Keywords: We’ll guide you on selecting keywords relevant to your content. These are the words and phrases people are likely to type when searching for what your page offers.

Placing Keywords Effectively: Learn where to strategically place keywords in your content to improve its visibility to search engines.

URL Structure Optimization

Your website’s address matters! It’s like having a clear address for your house. Let’s look at optimizing your URLs for better SEO.

Crafting SEO-Friendly URLs:

What Makes a Good URL?: Understand the components of a good URL. It’s like making sure your address is easy to read and remember.

Optimizing for Search Engines: Learn how to structure URLs in a way that helps search engines understand your content better.
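To show what “easy to read” can mean in practice, the sketch below checks a URL against a few common conventions: a reasonable length, lowercase paths, hyphens instead of underscores, and no query parameters. The rules and the 115-character limit are assumptions chosen for illustration; adjust them to your own guidelines.

```python
from urllib.parse import urlparse


def url_issues(url, max_length=115):
    """Return a list of readability problems found in a URL.

    max_length is an assumed threshold, not a universal rule.
    """
    parsed = urlparse(url)
    path = parsed.path
    issues = []
    if len(url) > max_length:
        issues.append("too long")
    if path != path.lower():
        issues.append("uppercase characters")
    if "_" in path:
        issues.append("underscores")
    if parsed.query:
        issues.append("query parameters")
    return issues


print(url_issues("https://example.com/Blog/My_Post?id=7"))
print(url_issues("https://example.com/blog/my-post"))
```

The first URL trips three of the checks, while the second, cleaner one passes them all.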

By diving into these elements, you’ll be optimizing key parts of your website, making it more search-engine-friendly and user-friendly. Ready to fine-tune your website for better visibility? Let’s get started!

Handling Images and Multimedia

Handling images and multimedia is all about making the visual parts of your website look fantastic and work smoothly. Think of your website as a photo album or a movie.

I’ll guide you on how to pick the right pictures and make sure they load fast and look great. It’s a bit like choosing the best photos for your album or making sure the scenes in your movie are clear and crisp.

Image Optimization Tips

Now, let’s focus on the visual elements of your website, particularly images. Optimizing them is like making sure they’re in their best shape, both for your visitors and for search engines.

Choosing the Right Format:

Understanding Image Formats: Learn about different image formats (like JPEG, PNG, and GIF) and when to use each. It’s like choosing the right canvas for your artwork.

Balancing Quality and File Size: We’ll guide you on finding the sweet spot between image quality and file size. It’s important for faster loading times and a better user experience.

Optimizing Alt Text:

What is Alt Text?: Understand the role of alt text, which is a short text description of an image that screen readers read aloud and browsers show when the image cannot load. We’ll guide you on where to add it.

Making Alt Text Effective: Learn how to craft alt text that’s not only descriptive for accessibility but also includes relevant keywords for SEO.
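As a quick illustration, this sketch scans a page’s HTML and lists images whose alt attribute is missing or empty. It uses only Python’s standard library, and the sample markup is made up. Keep in mind that an empty alt can be intentional for purely decorative images, so treat the output as a list to review rather than a list of errors.

```python
from html.parser import HTMLParser


class AltTextAuditor(HTMLParser):
    """Collects the src of every <img> with a missing or empty alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        if alt is None or not alt.strip():
            self.missing.append(attributes.get("src", "(no src)"))


def images_missing_alt(html):
    """Return the src values of images that need alt text reviewed."""
    auditor = AltTextAuditor()
    auditor.feed(html)
    return auditor.missing


sample_html = (
    '<img src="logo.png" alt="Company logo">'
    '<img src="hero.jpg">'
    '<img src="chart.png" alt="">'
)
print(images_missing_alt(sample_html))
```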

Multimedia Content Best Practices

Beyond images, multimedia content like videos and audio can enhance your website. Let’s explore how to make the most of these elements.

Optimizing Videos:

Video Format and Compression: Understand the best formats and compression techniques for videos. It’s like choosing the right stage for a video performance.

Adding Descriptive Titles and Captions: We’ll guide you on how to title your videos effectively and include captions for better accessibility and SEO.

Enhancing Audio Elements:

Audio File Types: Learn about different audio file types and when to use them. It’s like choosing the right soundtrack for your website.

Metadata and Descriptions: We’ll guide you on adding metadata and descriptions to your audio files. It’s important for both organization and SEO.

By optimizing your images and multimedia content, you’re not just making your website visually appealing but also ensuring a smoother experience for your visitors. Ready to enhance the visual and auditory aspects of your site? Let’s get started!

Addressing Technical SEO

Addressing technical SEO is like giving your website a checkup to ensure it’s running smoothly behind the scenes. Think of it as a maintenance session for your online space.

I’ll guide you through the technical side of things—making sure your website’s links are working, pages load quickly, and everything is organized neatly. It’s a bit like making sure all the gears in a clock are ticking perfectly to keep time.

Checking for Broken Links and Redirects

Now, let’s dive into the technical side of your website. Imagine your website as a network of roads; we need to make sure there are no roadblocks.

Identifying Broken Links:

Understanding Broken Links: Broken links are like dead ends on your website. We’ll guide you on how to find and fix them, ensuring a seamless navigation experience for your visitors.

Tools for Checking Links: We’ll introduce you to tools that act like your website’s navigation GPS, helping you identify and fix broken links efficiently.

Implementing Redirects:

What are Redirects?: Redirects are like detour signs on your website roads. We’ll explain what they are and when to use them.

Setting Up Redirects: Learn how to set up redirects to guide visitors from old or changed pages to the right destination, improving user experience and SEO.
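Redirects that point to other redirects form chains, and chains can accidentally turn into loops. The sketch below follows a redirect through a simple mapping of source to destination and labels the result. The mapping, the function name, and the ten-hop limit are all hypothetical; in practice you might build the mapping from a crawl export.

```python
def trace_redirects(start, redirects, max_hops=10):
    """Follow start through the redirects mapping.

    Returns (path, status), where status is one of "no redirect",
    "single redirect", "chain", "loop", or "too many hops".
    """
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"
        seen.add(current)
        if len(path) - 1 >= max_hops:
            return path, "too many hops"
    hops = len(path) - 1
    if hops == 0:
        return path, "no redirect"
    if hops == 1:
        return path, "single redirect"
    return path, "chain"


# Made-up redirect rules: /old reaches /new in two hops, /a and /b point at each other.
rules = {"/old": "/interim", "/interim": "/new", "/a": "/b", "/b": "/a"}

for url in ["/old", "/a", "/new"]:
    print(url, trace_redirects(url, rules))
```

A chain like /old to /interim to /new is usually better replaced by a single redirect straight to the final page, and a loop needs fixing right away because visitors can never reach a destination.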

Handling Duplicate Content Issues

Imagine your website as a book, and each page is a chapter. Duplicate content is like having the same chapter repeated.

Identifying Duplicate Content:

Understanding Duplicate Content: We’ll guide you on recognizing instances where content is repeated. It’s like finding identical paragraphs in a book.

Solutions for Duplicate Content: Learn techniques to address duplicate content issues, ensuring that every page on your website adds distinct value for both users and search engines.
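One simple way to spot exact duplicates is to compare a fingerprint of each page’s text. The sketch below normalizes whitespace and letter case, hashes the result, and groups URLs that share a hash. It only catches pages whose text is identical after normalization; near-duplicates need a similarity measure instead. The page texts and function names are invented for the example.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text):
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_exact_duplicates(pages):
    """pages maps URL -> body text. Returns groups of URLs with identical text."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


sample_pages = {
    "/shoes": "Red  running shoes\nfor sale",
    "/shoes?ref=ad": "red running shoes for sale",
    "/hats": "Blue hats",
}
print(find_exact_duplicates(sample_pages))
```

Once you know which URLs share the same content, typical fixes include a canonical tag pointing to the preferred version or a redirect from the duplicate.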

Optimizing Site Speed

Picture your website as a race car; its speed matters. Slow-loading websites can be frustrating for visitors. This part focuses on optimizing your website for speed, ensuring a swift and enjoyable user experience.

Measuring Site Speed:

Understanding Load Times: Learn how to measure the speed of your website. It’s like checking how fast your race car can go.

Tools for Speed Testing: We’ll introduce you to tools that act as speedometers, helping you identify areas to improve and make your website load faster.

Improving Load Times:

Optimizing Images and Files: We’ll guide you on compressing images and files for faster loading. It’s like making your race car lighter for better speed.

Caching and Browser Optimization: Learn about techniques like caching and browser optimization to speed up how your website loads for repeat visitors.
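Browser caching is controlled largely by the Cache-Control response header. As a rough sketch, the function below reads a Cache-Control value and reports how long a browser may reuse the file: the max-age value in seconds, 0 when no-store is present, or None when no lifetime is given. It deliberately ignores other directives, and the function name is hypothetical.

```python
def cache_lifetime(cache_control):
    """Return the cache lifetime in seconds from a Cache-Control header value.

    Returns 0 if "no-store" is present, None if no max-age is specified.
    """
    max_age = None
    for directive in cache_control.split(","):
        name, _, value = directive.strip().partition("=")
        name = name.strip().lower()
        value = value.strip().strip('"')
        if name == "no-store":
            return 0
        if name == "max-age" and value.isdigit():
            max_age = int(value)
    return max_age


for header in ["public, max-age=31536000", "no-store", "private"]:
    print(repr(header), "->", cache_lifetime(header))
```

Static files such as images, stylesheets, and scripts that rarely change are the usual candidates for a long lifetime, while pages that change often are better served with a short one.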

By addressing these technical aspects, you’re ensuring your website’s roads are clear, its content is unique, and it races smoothly, providing an optimal experience for both users and search engines. Ready to fine-tune the technical side of your website? Let’s get started!

Utilizing Screaming Frog Reports

Utilizing Screaming Frog reports is like having a superhero detective share a detailed report about your website. Imagine your website is a city, and Screaming Frog is the detective telling you what’s happening on every street.

I’ll help you understand this report, showing you where your website is doing well and where it might need some attention. It’s a bit like getting a map to improve your city.

Reviewing Generated Reports

After Screaming Frog has crawled through your website, it creates reports summarizing what it discovered. This is like receiving a detailed map after a superhero explored your city.

Let’s understand how to read and make sense of these reports.

Navigating the Reports:

Locating the Reports: We’ll guide you on where to find the reports Screaming Frog generated. It’s like opening the superhero’s detailed map.

Understanding Key Sections: Learn to interpret different sections of the report, such as errors, warnings, and important metrics. It’s akin to deciphering the superhero’s notes about what needs attention.

Extracting Actionable Insights

Reports are not just for reading; they’re your guide to action. In this part, we’ll explore how to turn the insights from the reports into practical steps to improve your website.

Identifying Priorities:

Spotting Critical Issues: We’ll help you identify urgent matters that need attention. It’s like figuring out which parts of the city need immediate superhero intervention.

Understanding Opportunities: Discover hidden opportunities for improvement highlighted in the reports. It’s akin to finding areas where your website can shine even brighter.

By mastering the art of reviewing and extracting insights from Screaming Frog reports, you’re not just getting information; you’re gaining the power to enhance and optimize your website effectively. Ready to transform insights into action? Let’s explore!

Implementing Changes

Implementing changes is where you become the superhero of your website, making it even more awesome. Think of it like giving your digital space a makeover.

I’ll guide you on using insights from tools like Screaming Frog to make improvements. It’s a bit like rearranging the furniture in your home or adding new decorations to make it more inviting.

Using Screaming Frog Findings to Optimize Content

Now that you have insights from Screaming Frog reports, it’s time to put them into action. This is like taking the superhero’s recommendations from the detailed map and making improvements to your city.

Content Optimization Strategies:

Identifying Content Opportunities: We’ll guide you on finding areas in your content that can be improved. It’s like recognizing spots in the city that could use a makeover.

Keyword Integration: Learn how to strategically integrate important keywords into your content based on Screaming Frog findings. It’s akin to enhancing the city’s landmarks for better visibility.

Making Technical Adjustments Based on the Analysis

Besides content, technical aspects play a crucial role in website performance. Let’s explore how to make the necessary technical adjustments to enhance your website’s functionality.

Addressing Technical Issues:

Fixing Broken Links and Redirects: We’ll guide you on how to repair broken links and set up proper redirects. It’s like ensuring smooth navigation throughout your city.

Optimizing Site Speed: Learn strategies to improve your website’s loading speed. It’s similar to optimizing traffic flow in your city for a better user experience.

By implementing changes based on Screaming Frog findings, you’re actively working towards making your website more effective, just like a city continually evolving for the better. Ready to transform your website landscape? Let’s start making those improvements!

Monitoring and Iterating

Monitoring and iterating is like being the caretaker of your website, ensuring it stays in top shape over time. Imagine it’s a garden, and you’re the gardener making sure everything grows beautifully.

I’ll guide you on checking your website regularly, just like a gardener tends to plants. It’s a bit like making small improvements, watering the flowers, and keeping everything fresh.

Regularly Crawling and Monitoring Your Website

Website optimization is an ongoing journey, much like keeping an eye on your city’s development. In this part, we’ll explore the importance of regularly checking the health of your website using Screaming Frog.

Scheduling Regular Crawls:

Setting a Crawling Schedule: We’ll guide you on how to schedule regular crawls with Screaming Frog. It’s like having routine city inspections to ensure everything is running smoothly.

Monitoring Changes Over Time: Understand how to track changes in your website over time, spotting improvements or potential issues. It’s akin to observing how your city evolves.

Iterative Optimization for Continuous Improvement

The best cities are those that continuously adapt and improve. Similarly, your website should evolve for better performance. This section focuses on an iterative approach to optimization.

Reviewing and Adapting Strategies:

Analyzing Ongoing Reports: Learn how to analyze new reports generated by Screaming Frog. It’s like staying updated on the latest happenings in your city.

Adjusting Optimization Strategies: Understand the importance of adapting your optimization strategies based on ongoing insights. It’s akin to adjusting city plans to accommodate growth and changing needs.

By incorporating regular monitoring and iterative optimization into your website management, you’re ensuring that your online presence remains dynamic and responsive, much like a city that thrives through constant improvement. Ready to build a website that evolves with the times? Let’s explore the path of continuous enhancement!

Summary of the Topic

Embarking on the journey of website optimization with Screaming Frog is akin to transforming your online space into a well-organized and efficient city. Here are the key points to take away from our exploration:

- Getting Started:

  - Obtain Screaming Frog from the official website and install it on your computer.

  - Configure the tool to fit your preferences and initiate a new project for your website.

- Crawling Your Website:

  - Launch a crawl to let Screaming Frog explore your website thoroughly.

  - Understand the crawl results, identifying valuable insights and potential issues.

- Analyzing SEO Elements:

  - Focus on key SEO elements like title tags, meta descriptions, header tags, and keywords.

  - Optimize URL structures to enhance your website’s search engine visibility.

- Handling Images and Multimedia:

  - Optimize images by choosing the right format and crafting effective alt text.

  - Enhance multimedia elements such as videos and audio with proper formats and metadata.

- Utilizing Screaming Frog Reports:

  - Review generated reports to gain a comprehensive overview of your website’s health.

  - Extract actionable insights, identifying priorities and opportunities for improvement.

- Implementing Changes:

  - Use Screaming Frog findings to optimize content, focusing on keywords and content opportunities.

  - Make necessary technical adjustments, addressing issues like broken links and optimizing site speed.

- Monitoring and Iterating:

  - Regularly crawl and monitor your website to stay updated on its performance.

  - Adopt an iterative approach, reviewing reports and adjusting strategies for continuous improvement.

In essence, Screaming Frog acts as your superhero sidekick, guiding you through the intricate process of website optimization. By consistently implementing insights, adapting strategies, and embracing a proactive approach, you’re not just optimizing your website; you’re building a digital presence that evolves and thrives over time. So, gear up, explore, and transform your online space into a dynamic and efficient hub for your audience!

Want SEO Consultancy for your project?

If you feel lost somewhere in the middle of this process, I am here to help.